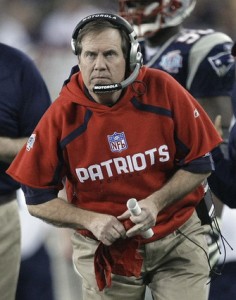

On the heels of the Super Bowl, it's hard not to think about the role of referees in sports. I love instant replay technology. Some of those calls are ridiculously hard to make, and replay helps keep everything on the up and up. Meanwhile, the fact that football coaches get only a limited number of challenges, and are penalized when they get one wrong, helps keep everyone honest and keeps the game clipping along at a reasonable pace.

We're dealing with a different kind of sport when it comes to wine. As a former springboard diver, I'd argue wine is more like a diving competition, where five judges each have a say in a performance. Is this fair?

On the one hand, whenever you are dealing with something subjective, you have to rely on a panel of judges to reach a conclusion. In diving, whenever my mom was on the panel, my scores were lower. Naturally, she feared that scoring me too high would look like favoritism. Fortunately, they drop the highest and lowest scores on each panel and average the middle three. It's not a perfect system, but it's the closest thing to fair you can get.

It doesn't always work that way for wine. Each competition seems to have its own judging process, typically on either a 100-point or a 20-point scale. Rules spell out which attributes a wine must have to earn a given score, but I don't think they ever drop a score. Goodness knows they should! A lot of external factors make this particular 'sport' a challenge to referee.

A recent three-year study conducted by Robert T. Johnson found that "of approximately 65 judging panels... just 30 panels achieved anything close to similar results, with the data pointing to 'judge inconsistency, lack of concordance--or both' as reasons for the variation. The phenomenon was so pronounced, in fact, that one panel of judges rejected two samples of identical wine, only to award the same wine a double gold in a third tasting." (Source: Wines & Vines) The abstract of the official report made an interesting point, too: bad wines are consistently rated poorly; it's the good-to-great wines that are hardest to judge fairly.

So what do scores really mean? Who are these so-called "experts"? How can we know they don't suffer from palate fatigue after tasting 100-some-odd wines in a single sitting? I know from personal experience that my judgment is definitely questionable by 6pm on Tuesday Tasting Day at the shop, compared to 10am, when my energy, mind and palate are "fresh." Am I drunk? Not at all. We spit so you don't have to. The truth is, no matter how professional you are, circumstances shape your experience of a wine. Subjectivity is the only writing on the wall.

What are we to do? Well, the average consumer can rest assured that plenty of folks in the trade are assessing the quality of a wine, so you are automatically tasting the better stuff on the market, even if it isn't always to your taste. But for me, this kind of study simply underscores what I'm always saying: context is everything; scores are relative. Give a few "judges" a try, weigh their knowledge and how well your palate matches theirs, find your congenial wine guru, and then taste from their cup of suggestions.

Do you think wine judging is a worthwhile undertaking or too subjective to have much merit?